Evaluating and improving the performance of AI models is critical to ensuring that they deliver accurate, reliable, and contextually relevant responses. The process involves systematically testing a model against a variety of tasks to identify its strengths and weaknesses. A structured, iterative approach helps keep the AI system robust and aligned with project-specific objectives.

A good example is a project that requires creating and evaluating prompts for image-based tasks, with a particular emphasis on market research and data analysis. Suppose, for instance, that the project involves analyzing election data displays and testing the model’s ability to interpret numerical information accurately. The following sections outline a structured approach to projects of this kind.

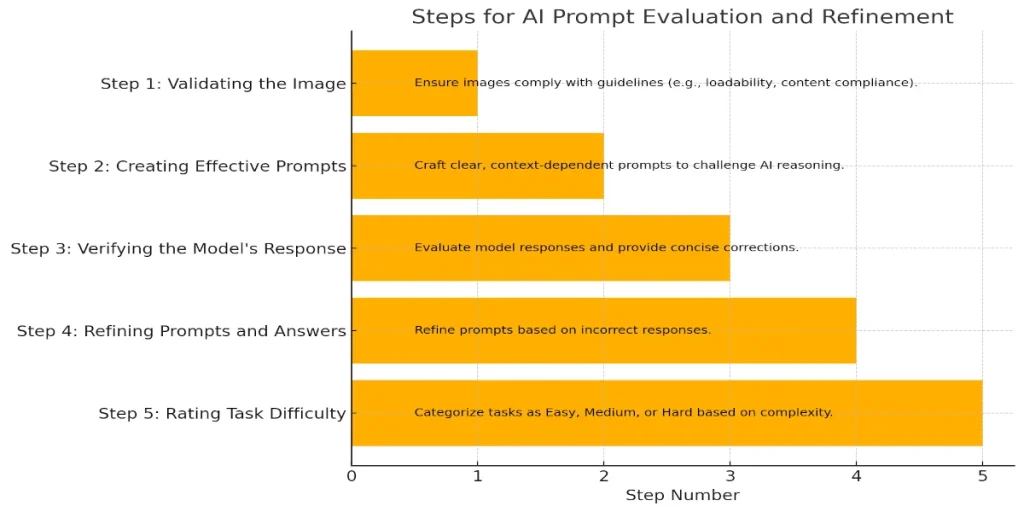

Step 1: Validating the Image

The first step in projects of this type is to ensure that the provided images comply with established guidelines. In the election data project, images were assessed for:

- Loadability: Images that failed to load or display correctly were marked invalid.

- Content Compliance: Any sensitive information (e.g., voter addresses or ID numbers) had to be flagged.

- Language Appropriateness: Non-English content was excluded from the scope.

- Contextual Relevance: Images had to provide sufficient information to formulate meaningful prompts. For instance, a blurry or incomplete electoral district chart would be marked invalid with an explanation.
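The four checks above are reviewer judgments, but recording them in a consistent structure makes the accept/reject decision and its explanation reproducible. The sketch below assumes a reviewer fills in a hypothetical `ImageRecord` for each image. It also treats flagged sensitive information as disqualifying, which is an assumption since the guidelines only say such content must be flagged.

```python
from dataclasses import dataclass


@dataclass
class ImageRecord:
    """Hypothetical reviewer annotations for one submitted image."""
    image_id: str
    loads: bool               # did the image load and display correctly?
    has_sensitive_info: bool  # e.g., voter addresses or ID numbers are visible
    language: str             # dominant language of the text in the image
    sufficient_context: bool  # enough detail to support meaningful prompts


def validate_image(record: ImageRecord) -> tuple[bool, list[str]]:
    """Apply the four Step 1 checks; return (is_valid, reasons_for_rejection)."""
    reasons: list[str] = []
    if not record.loads:
        reasons.append("Image failed to load or display correctly.")
    if record.has_sensitive_info:
        # Assumption: flagged sensitive content makes the image non-compliant.
        reasons.append("Image contains sensitive information and must be flagged.")
    if record.language.lower() != "english":
        reasons.append("Non-English content is out of scope.")
    if not record.sufficient_context:
        reasons.append("Image lacks enough information for meaningful prompts.")
    return (not reasons, reasons)


# A blurry district chart: it loads, but it cannot support meaningful prompts.
chart = ImageRecord("district-chart-07", loads=True, has_sensitive_info=False,
                    language="English", sufficient_context=False)
ok, reasons = validate_image(chart)
print(ok, reasons)
# False ['Image lacks enough information for meaningful prompts.']
```

Returning the list of reasons, rather than a bare boolean, supports the requirement that an invalid image be marked with an explanation.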

Step 2: Creating Effective Prompts

Once an image is validated, the next step is to craft prompts that genuinely challenge the AI model. High-quality prompts must:

- Depend on the Image: The question should not be answerable without the image’s context.

- Challenge the Model: Questions should test the model’s reasoning and comprehension skills.

- Avoid Ambiguity: Clarity in phrasing is essential to ensure accurate interpretation.

- Follow Best Practices: Prompts must stand on their own without relying on earlier prompts, must not be multiple-choice, and must use correct grammar.
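Only some of these rules can be checked mechanically. Image dependence, difficulty, and clarity still need a human reviewer. The sketch below is a minimal pre-submission lint for the two mechanical rules. Both regular expressions are hypothetical heuristics, not part of any published guideline, and they will produce occasional false positives, such as a sentence that ends in "Plan A. ".

```python
import re

# Hypothetical heuristic: option markers such as "A)", "(b)", or "C." followed by a space.
MULTIPLE_CHOICE = re.compile(r"(^|\s)\(?[A-Da-d][).]\s")
# Hypothetical heuristic: phrases suggesting the prompt depends on an earlier one.
PRIOR_REFERENCE = re.compile(
    r"\b(previous|earlier|last) (question|prompt|answer)\b", re.IGNORECASE
)


def lint_prompt(prompt: str) -> list[str]:
    """Return the rule violations that can be detected mechanically.

    Image dependence, difficulty, and clarity still require human review.
    """
    text = prompt.strip()
    if not text:
        return ["Prompt is empty."]
    problems: list[str] = []
    if MULTIPLE_CHOICE.search(text):
        problems.append("Looks like a multiple-choice question.")
    if PRIOR_REFERENCE.search(text):
        problems.append("Appears to rely on a previous prompt.")
    return problems


print(lint_prompt("Which district had the highest turnout? A) District 1 B) District 2"))
# ['Looks like a multiple-choice question.']
```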

Example Prompts

For the election data display scenario above, prompts might look like this:

- “What is the total voter turnout in District 2?”

- “Which candidate received the highest percentage of votes in District 3?”

- “Compare the votes received by the top two parties in District 4.”

These prompts challenge the model to interpret both numerical and categorical data accurately, reflecting the demands of real-world applications.
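Grading the model is easier when every prompt has a reference answer computed from the chart itself. Below is a minimal sketch that assumes the chart’s values have been transcribed into a dictionary. The figures are hypothetical placeholders, not real election data. The sketch also assumes that "turnout" means the total number of votes cast rather than a percentage of registered voters.

```python
# Hypothetical figures standing in for values read off an election chart.
RESULTS = {
    "District 2": {"Candidate A": 4_210, "Candidate B": 3_870, "Candidate C": 920},
    "District 3": {"Candidate A": 2_950, "Candidate B": 3_400, "Candidate C": 1_150},
    "District 4": {"Party X": 5_600, "Party Y": 5_100, "Party Z": 700},
}


def total_turnout(district: str) -> int:
    """Total votes cast in a district (assumes turnout is reported as a count)."""
    return sum(RESULTS[district].values())


def leader_by_share(district: str) -> tuple[str, float]:
    """Candidate with the highest vote share, and that share as a percentage."""
    votes = RESULTS[district]
    winner = max(votes, key=lambda name: votes[name])
    return winner, 100 * votes[winner] / sum(votes.values())


def top_two_gap(district: str) -> tuple[str, str, int]:
    """The two highest vote-getters and the vote difference between them."""
    ranked = sorted(RESULTS[district].items(), key=lambda kv: kv[1], reverse=True)
    (first, v1), (second, v2) = ranked[:2]
    return first, second, v1 - v2


print(total_turnout("District 2"))   # 9000
winner, share = leader_by_share("District 3")
print(f"{winner}: {share:.1f}%")     # Candidate B: 45.3%
print(top_two_gap("District 4"))     # ('Party X', 'Party Y', 500)
```

Computing the key up front means a wrong model answer can be spotted, and corrected, without re-reading the chart under time pressure.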

Step 3: Verifying the Model’s Response

After a prompt is submitted, the model’s response is evaluated carefully:

- If the response is correct, a harder follow-up prompt is crafted to push the model further, with the aim of finding a failure point within three iterations.

- If the response is incorrect, the correct answer is provided concisely (5-8 words) without reasoning steps. For example:

  - Incorrect answer: “Candidate A won the District 3 race.”

  - Correct answer: “Candidate B won the District 3 race.”

Clear explanations of why the response is incorrect are documented to guide future refinements.
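The verification loop can be summarized in code: ask up to three prompts, stop at the first incorrect answer, and record a 5-8 word correction along with an explanation. In this sketch, `ask_model` and `grade` are hypothetical hooks standing in for the model under test and the human grader. For simplicity, the follow-up prompts are supplied up front, although in practice each one is written after seeing the previous answer.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

MAX_ITERATIONS = 3  # aim to find a failure point within three prompts


@dataclass
class Turn:
    """One prompt/response exchange in an evaluation session."""
    prompt: str
    model_answer: str
    correct: bool
    correct_answer: Optional[str] = None  # recorded only when the model is wrong
    explanation: Optional[str] = None     # why the model's answer is wrong


def check_correction(answer: str) -> None:
    """Enforce the 5-8 word limit on a corrected answer."""
    n_words = len(answer.split())
    if not 5 <= n_words <= 8:
        raise ValueError(f"Correct answer must be 5-8 words, got {n_words}.")


def run_session(
    prompts: List[str],
    ask_model: Callable[[str], str],
    grade: Callable[[str, str], Tuple[bool, str, str]],
) -> List[Turn]:
    """Ask up to MAX_ITERATIONS prompts, stopping at the first incorrect answer.

    grade returns (is_correct, correct_answer, explanation).
    """
    turns: List[Turn] = []
    for prompt in prompts[:MAX_ITERATIONS]:
        answer = ask_model(prompt)
        is_correct, correction, why = grade(prompt, answer)
        if is_correct:
            # Correct: record it and move on to the next, harder prompt.
            turns.append(Turn(prompt, answer, True))
            continue
        # Incorrect: validate the concise correction, record it, and stop.
        check_correction(correction)
        turns.append(Turn(prompt, answer, False, correction, why))
        break
    return turns


# Usage with stand-in hooks (no real model is called here).
log = run_session(
    ["Which candidate won District 3?"],
    ask_model=lambda p: "Candidate A won District 3.",
    grade=lambda p, a: (False, "Candidate B won the District 3 race.",
                        "Misread the bar heights."),
)
print(log[0].correct, log[0].correct_answer)
# False Candidate B won the District 3 race.
```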

Step 4: Refining Prompts and Answers

Incorrect model outputs become opportunities for refinement. In the election data project, these feedback loops are particularly valuable for improving the model’s interpretation of numerical data, leading to more robust responses in later iterations.

Interested in iterative AI prompt refinement or in optimizing AI for data analysis? Elite Data Labs is here to get you started.

Step 5: Rating Task Difficulty

Each task is then categorized based on complexity:

- Easy: Solvable in under two minutes and requiring only a basic understanding of the data (e.g., “Which district has the lowest voter turnout?”).

- Medium: Requiring more detailed analysis and taking two to five minutes (e.g., “What is the average vote difference between the top two candidates across all districts?”).

- Hard: Involving advanced reasoning or multi-step calculations and taking more than five minutes (e.g., “Identify the districts where the vote margin between the top two candidates exceeds 10% and summarize the total votes cast.”).
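Because the three tiers are defined by estimated solve time, the rating reduces to a simple threshold function. The sketch below assumes that a task taking exactly two or exactly five minutes counts as Medium, since the text does not specify how boundary cases are assigned.

```python
def rate_difficulty(minutes: float) -> str:
    """Map an estimated solve time in minutes to a difficulty tier.

    Assumption: exactly 2 and exactly 5 minutes both fall in Medium.
    """
    if minutes < 0:
        raise ValueError("Solve time cannot be negative.")
    if minutes < 2:
        return "Easy"
    if minutes <= 5:
        return "Medium"
    return "Hard"


print([rate_difficulty(t) for t in (1.5, 3, 7)])
# ['Easy', 'Medium', 'Hard']
```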

Example Use Cases

- Election Data Analysis: Helping government agencies promote transparency in election results by testing whether AI models interpret voter statistics accurately.

- Market Research: Assisting businesses in analyzing survey results or product performance metrics through optimized AI prompts.

- Data Visualization Testing: Ensuring AI can interpret and summarize information from complex charts and graphs.

Challenges and Considerations

Projects like this often present several challenges, including:

- Precision with Numerical Data: Even straightforward prompts involving electoral statistics require meticulous attention to detail.

- Maintaining Objectivity: Prompts have to genuinely test the model’s capabilities without being misleading.

- Domain-Specific Knowledge: For example, understanding electoral processes is crucial for crafting effective prompts in a project of this nature.

- Guideline Ambiguities: Some task guidelines require interpretation or clarification, particularly when handling edge cases.

Anticipating these challenges is critical to delivering AI projects successfully and to ensuring model accuracy in specific domains.

Key Takeaways

For projects that require creating and evaluating prompts for image-based tasks, a structured approach is essential. Clear, well-crafted prompts, rigorous evaluation, and consistent adherence to guidelines produce accurate assessments of a model’s capabilities. These practices not only improve model reliability but also guide the model’s development toward handling complex, real-world applications.

We can help you evaluate AI performance, optimize your AI for market research, and test your AI on numerical and visual data. Talk to Elite Data Labs today!